Hey @marcosmarxm, following the tutorial, I exported the generated normalization project.

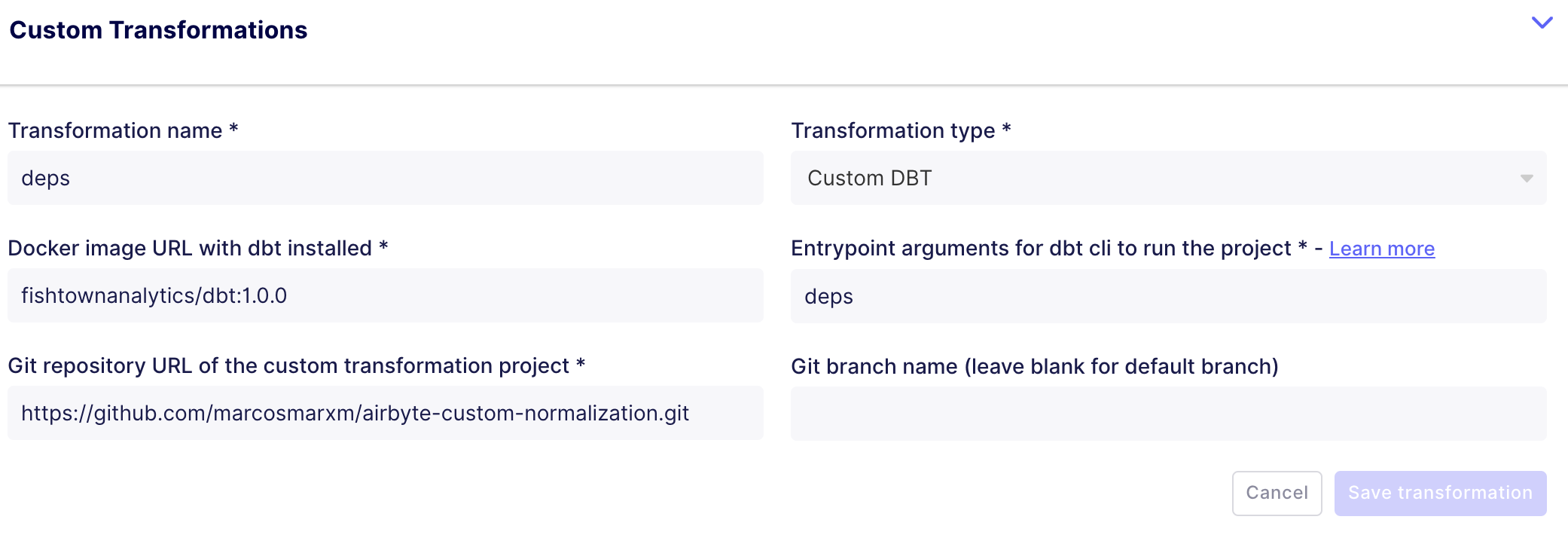

Running dbt deps created the dependencies in the /dbt folder:

dbt deps --profiles-dir=. --project-dir=.

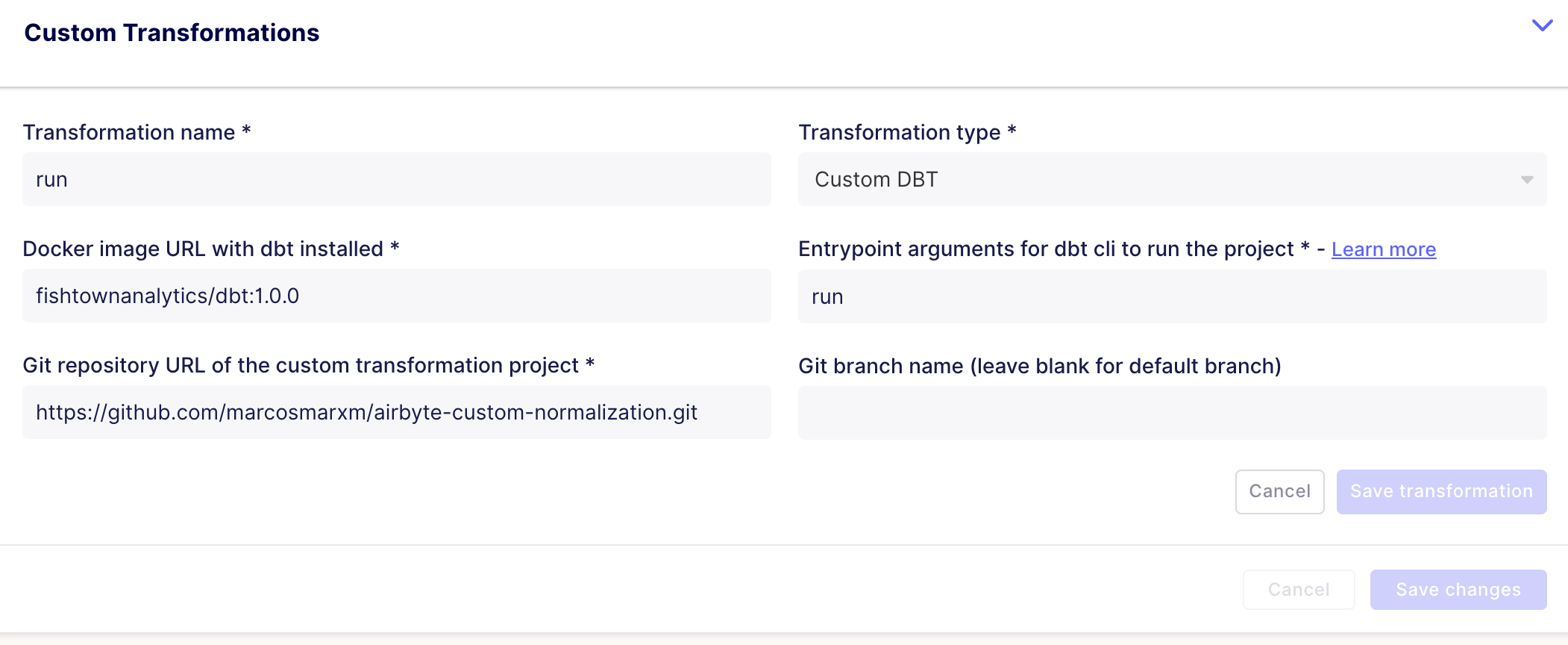

Running dbt run then built the generated models successfully:

dbt run --profiles-dir=. --project-dir=.

Next, I created a GitHub dbt project with the exported normalization structure to test the custom transformation.

However, it failed with this error:

2022-04-16 03:34:23 dbt > Running from /data/95/0/transform/git_repo

2022-04-16 03:34:23 dbt > detected no config file for ssh, assuming ssh is off.

2022-04-16 03:34:23 dbt > Running: dbt run --profiles-dir=/data/95/0/transform --project-dir=/data/95/0/transform/git_repo

2022-04-16 03:34:30 dbt > 03:34:30 Running with dbt=1.0.0

2022-04-16 03:34:30 dbt > 03:34:30 Encountered an error:

2022-04-16 03:34:30 dbt > Compilation Error

2022-04-16 03:34:30 dbt > dbt found 1 package(s) specified in packages.yml, but only 0 package(s) installed in /dbt. Run "dbt deps" to install package dependencies.

2022-04-16 03:34:31 INFO i.a.w.t.TemporalAttemptExecution(lambda$getWorkerThread$2):158 - Completing future exceptionally...

io.airbyte.workers.WorkerException: Dbt Transformation Failed.

at io.airbyte.workers.DbtTransformationWorker.run(DbtTransformationWorker.java:57) ~[io.airbyte-airbyte-workers-0.36.0-alpha.jar:?]

at io.airbyte.workers.DbtTransformationWorker.run(DbtTransformationWorker.java:16) ~[io.airbyte-airbyte-workers-0.36.0-alpha.jar:?]

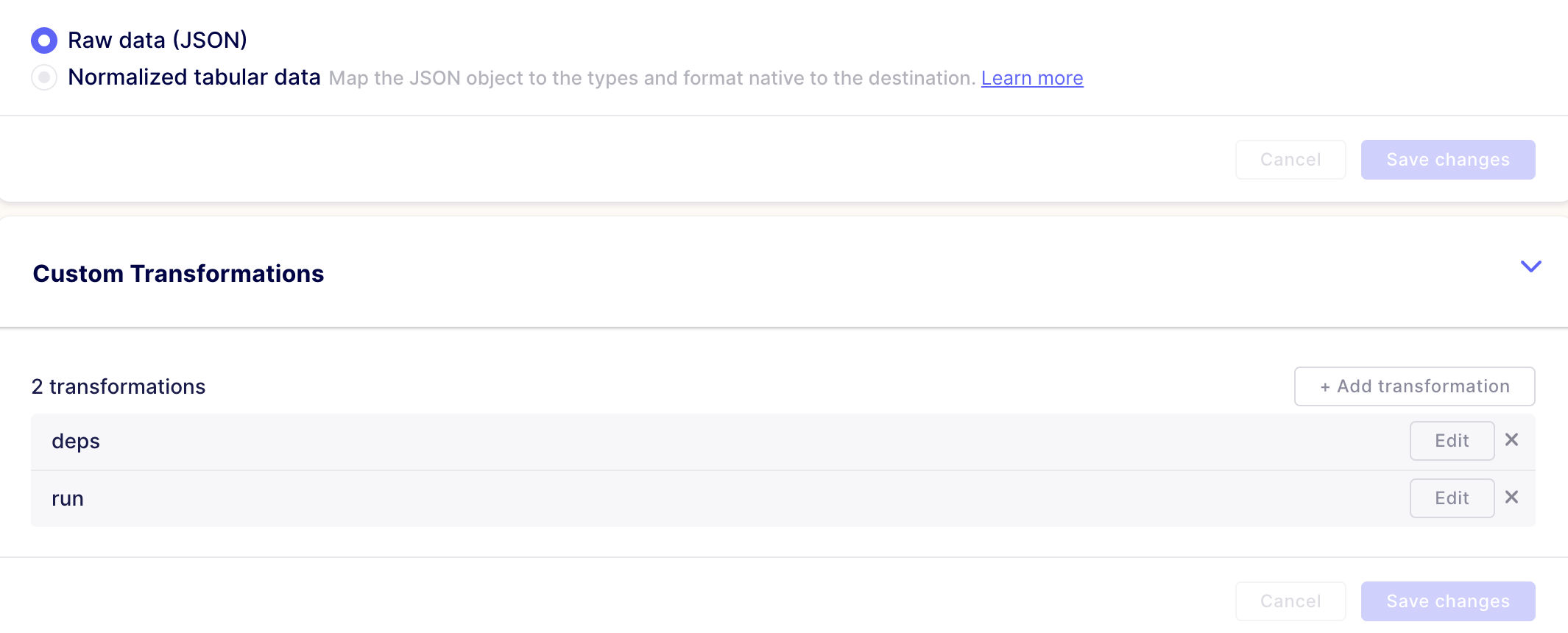

I tried adding an additional transformation step to install the deps, but I still get the same error.

I could not find where in the Docker container dbt deps installs the packages, or why the run command cannot find them.
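For reference, here is a rough sketch of how I would check where the packages are expected to land. It assumes the exported dbt_project.yml sets `packages-install-path` (the dbt 1.0 key for the install directory) as a top-level key. That the value is /dbt is only my guess from the error message, and `install_path` is a helper name I made up for this check.

```shell
# Hypothetical helper: print the packages-install-path value from a dbt_project.yml.
# Assumes the key sits at the top level of the file, on a single line.
install_path() {
  sed -n 's/^packages-install-path:[[:space:]]*//p' "$1" | tr -d "\"'"
}

# Only run the check when a project file exists in the current directory.
if [ -f dbt_project.yml ]; then
  dir="$(install_path dbt_project.yml)"
  # dbt 1.0 falls back to dbt_packages when the key is not set.
  dir="${dir:-dbt_packages}"
  echo "dbt deps should install packages into: $dir"
  ls -la "$dir" 2>/dev/null || echo "nothing found at $dir"
fi
```

If the directory is empty inside the container that executes the run step, that would match the "0 package(s) installed in /dbt" message above.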

Can you please advise?