- Is this your first time deploying Airbyte?: No

- OS Version / Instance: Amazon Linux 2/ EC2

- Memory / Disk: 8GiB/50GB

- Deployment: Docker

- Airbyte Version: 0.35.31-alpha

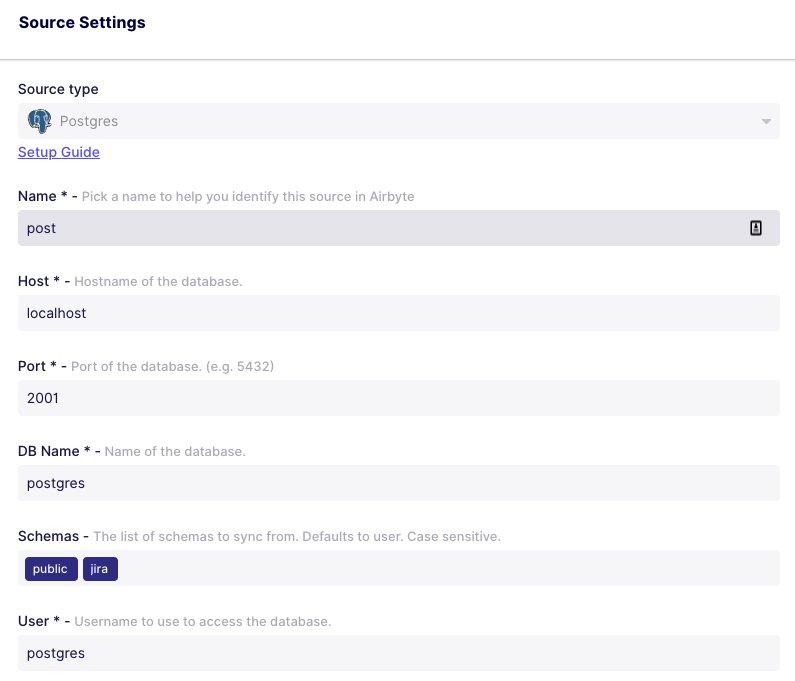

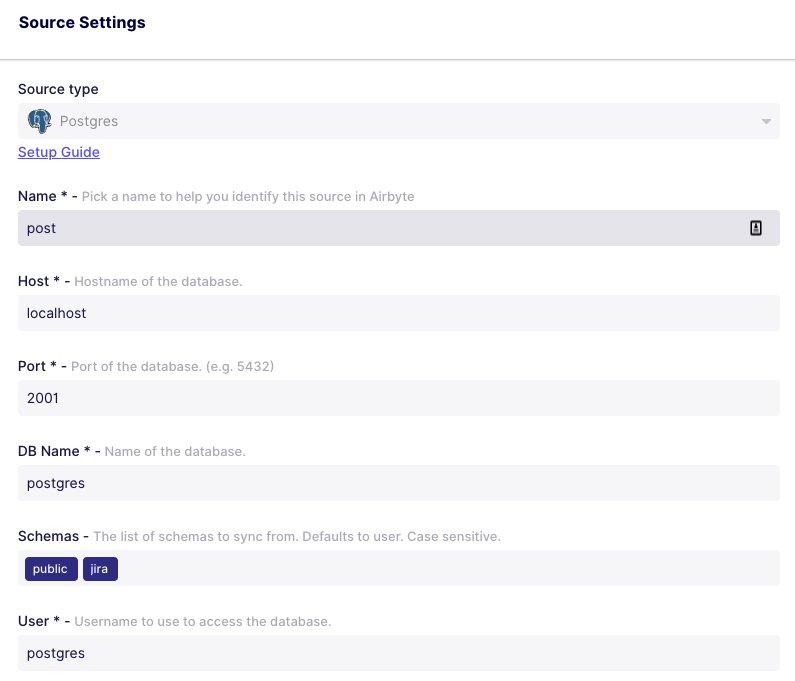

- Source name/version: Postgres/0.4.4

- Destination name/version: BigQuery/1.0.2

- Step: Creating the connection

- Description:

— What’s working—

- I can successfully create a connection to Postgres on RDS

- I can successfully create a connection to BigQuery on GCP

— The issue —

When trying to connect the two, the process gets stuck on schema detection. Twice, after a couple of hours, it’s returned a “failed to detect schema” message, but every other time it gets stuck showing “Please wait a bit more…”

What’s more baffling is that I already had the connection working, but I deleted it to start afresh. Nothing’s worked since then.

I have also tried having an S3 bucket as the destination, still same problem.

There are no error logs available.

I’m using Postgres 0.4.4. since it’s the version of the connector that I’ve been able to consume CDC logs for another DB

@kelvingakuo can you capture the API response for the discovery_schema call?

Open the developer tools > network tab

Unfortunately the API call never returns. It’s been stuck for close to 3hrs now. I had to docker-compose down then docker-compose up to at least see the API call. But there’s a “failed to load resource” error in the console

For context, the Postgres DB I’m connecting to has 9 schemas, 24 tables, with the largest table being 125MB (1.4M rows). Excluding the schema with that table doesn’t seem to have any effect.

It’s finally failed!!

Ignore the “Cannot reach server” error. The SSH tunnel broke for a bit.

Edit: I had even tried having the source use CDC with wal2json with no luck

MAJOR UPDATE

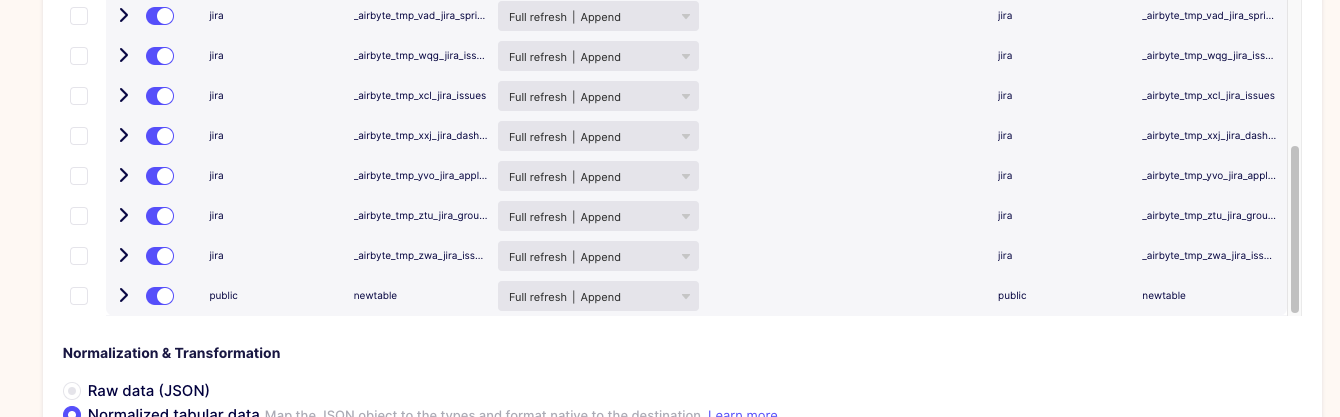

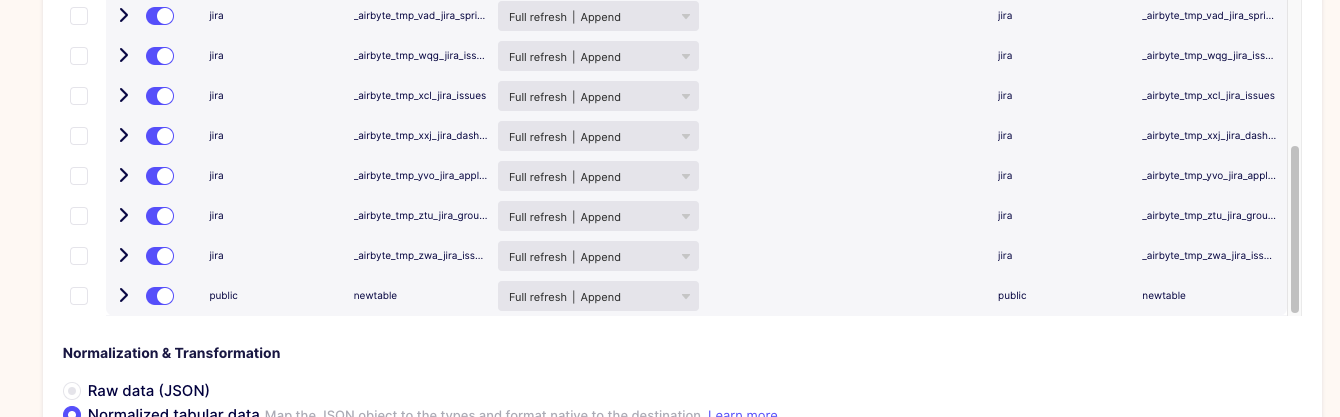

It just hit me that the first time I had a working connection, it was syncing from the “public” schema only. I’ve tried changing the source setup to the “public” schema only, and it works!!! @marcosmarxm

Interestingly, just before running into all these issues, the connection was working with the source using all schemas

1 Like

Sorry the long delay @kelvingakuo I was OOO. Is it working now?

Hey @marcosmarxm ,

No problemo! It is working, but only if the Postgres connector is using the public schema ONLY

I think I have the same problem. Can only see public schema for my PSQL.

@marcosmarxm any thoughts?

Airbyte version: 0.35.60-alpha

PSQL connector: 0.4.4

Using docker compose, but issue is also present on our k8s deployment.

Here are relevant parts of my logs (can provide further logs if required):

airbyte-worker | 2022-04-14 11:05:54 INFO i.a.w.t.TemporalAttemptExecution(get):110 - Executing worker wrapper. Airbyte version: 0.35.60-alpha

airbyte-worker | 2022-04-14 11:05:54 INFO i.a.c.i.LineGobbler(voidCall):82 - Checking if airbyte/source-postgres:0.4.4 exists...

airbyte-worker | 2022-04-14 11:05:54 INFO i.a.c.i.LineGobbler(voidCall):82 - airbyte/source-postgres:0.4.4 was found locally.

airbyte-worker | 2022-04-14 11:05:54 INFO i.a.w.p.DockerProcessFactory(create):106 - Creating docker job ID: 4591267a-c9a3-444e-8235-3fcb33a5eccb

airbyte-worker | 2022-04-14 11:05:54 INFO i.a.w.p.DockerProcessFactory(create):158 - Preparing command: docker run --rm --init -i -w /data/4591267a-c9a3-444e-8235-3fcb33a5eccb/0 --log-driver none --network host -v airbyte_workspace:/data -v /tmp/airbyte_local:/local -e WORKER_CONNECTOR_IMAGE=airbyte/source-postgres:0.4.4 -e WORKER_JOB_ATTEMPT=0 -e WORKER_ENVIRONMENT=DOCKER -e AIRBYTE_ROLE= -e AIRBYTE_VERSION=0.35.60-alpha -e WORKER_JOB_ID=4591267a-c9a3-444e-8235-3fcb33a5eccb airbyte/source-postgres:0.4.4 discover --config source_config.json

airbyte-worker | 2022-04-14 11:05:54 ERROR i.a.c.i.LineGobbler(voidCall):82 - SLF4J: Class path contains multiple SLF4J bindings.

airbyte-worker | 2022-04-14 11:05:54 ERROR i.a.c.i.LineGobbler(voidCall):82 - SLF4J: Found binding in [jar:file:/airbyte/lib/log4j-slf4j-impl-2.16.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

airbyte-worker | 2022-04-14 11:05:54 ERROR i.a.c.i.LineGobbler(voidCall):82 - SLF4J: Found binding in [jar:file:/airbyte/lib/slf4j-log4j12-1.7.30.jar!/org/slf4j/impl/StaticLoggerBinder.class]

airbyte-worker | 2022-04-14 11:05:54 ERROR i.a.c.i.LineGobbler(voidCall):82 - SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

airbyte-worker | 2022-04-14 11:05:54 ERROR i.a.c.i.LineGobbler(voidCall):82 - SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

airbyte-worker | 2022-04-14 11:05:55 INFO i.a.w.p.a.DefaultAirbyteStreamFactory(lambda$create$0):61 - 2022-04-14 11:05:55 INFO i.a.i.s.p.PostgresSource(main):356 - starting source: class io.airbyte.integrations.source.postgres.PostgresSource

airbyte-worker | 2022-04-14 11:05:55 INFO i.a.w.p.a.DefaultAirbyteStreamFactory(lambda$create$0):61 - 2022-04-14 11:05:55 INFO i.a.i.b.IntegrationCliParser(parseOptions):118 - integration args: {discover=null, config=source_config.json}

airbyte-worker | 2022-04-14 11:05:55 INFO i.a.w.p.a.DefaultAirbyteStreamFactory(lambda$create$0):61 - 2022-04-14 11:05:55 INFO i.a.i.b.IntegrationRunner(runInternal):104 - Running integration: io.airbyte.integrations.base.ssh.SshWrappedSource

airbyte-worker | 2022-04-14 11:05:55 INFO i.a.w.p.a.DefaultAirbyteStreamFactory(lambda$create$0):61 - 2022-04-14 11:05:55 INFO i.a.i.b.IntegrationRunner(runInternal):105 - Command: DISCOVER

airbyte-worker | 2022-04-14 11:05:55 INFO i.a.w.p.a.DefaultAirbyteStreamFactory(lambda$create$0):61 - 2022-04-14 11:05:55 INFO i.a.i.b.IntegrationRunner(runInternal):106 - Integration config: IntegrationConfig{command=DISCOVER, configPath='source_config.json', catalogPath='null', statePath='null'}

airbyte-worker | 2022-04-14 11:05:55 INFO i.a.w.p.a.DefaultAirbyteStreamFactory(lambda$create$0):61 - 2022-04-14 11:05:55 WARN c.n.s.JsonMetaSchema(newValidator):338 - Unknown keyword order - you should define your own Meta Schema. If the keyword is irrelevant for validation, just use a NonValidationKeyword

airbyte-worker | 2022-04-14 11:05:55 INFO i.a.w.p.a.DefaultAirbyteStreamFactory(lambda$create$0):61 - 2022-04-14 11:05:55 WARN c.n.s.JsonMetaSchema(newValidator):338 - Unknown keyword examples - you should define your own Meta Schema. If the keyword is irrelevant for validation, just use a NonValidationKeyword

airbyte-worker | 2022-04-14 11:05:55 INFO i.a.w.p.a.DefaultAirbyteStreamFactory(lambda$create$0):61 - 2022-04-14 11:05:55 WARN c.n.s.JsonMetaSchema(newValidator):338 - Unknown keyword airbyte_secret - you should define your own Meta Schema. If the keyword is irrelevant for validation, just use a NonValidationKeyword

airbyte-worker | 2022-04-14 11:05:55 INFO i.a.w.p.a.DefaultAirbyteStreamFactory(lambda$create$0):61 - 2022-04-14 11:05:55 WARN c.n.s.JsonMetaSchema(newValidator):338 - Unknown keyword multiline - you should define your own Meta Schema. If the keyword is irrelevant for validation, just use a NonValidationKeyword

airbyte-worker | 2022-04-14 11:05:55 INFO i.a.w.p.a.DefaultAirbyteStreamFactory(lambda$create$0):61 - 2022-04-14 11:05:55 INFO i.a.i.b.s.SshTunnel(getInstance):170 - Starting connection with method: NO_TUNNEL

airbyte-worker | 2022-04-14 11:05:56 INFO i.a.w.p.a.DefaultAirbyteStreamFactory(lambda$create$0):61 - 2022-04-14 11:05:56 INFO i.a.i.s.j.AbstractJdbcSource(discoverPrimaryKeys):203 - Discover primary keys for tables: []

airbyte-worker | 2022-04-14 11:05:56 INFO i.a.w.p.a.DefaultAirbyteStreamFactory(lambda$create$0):61 - 2022-04-14 11:05:56 INFO i.a.i.s.p.PostgresSource(isCdc):253 - using CDC: false

airbyte-worker | 2022-04-14 11:05:56 INFO i.a.w.t.TemporalAttemptExecution(get):131 - Stopping cancellation check scheduling...

airbyte-server | 2022-04-14 11:05:56 INFO i.a.s.RequestLogger(filter):112 - REQ 172.19.0.5 POST 200 /api/v1/sources/discover_schema - {"sourceId":"944a0fac-1bf0-4125-80f9-a0240fbf621f"}

additional perhaps relevant context is that my DB is hosted on GCP, and I’m using their CloudSQL proxy to access it. no SSH. am a bit of a noob though so apologies if missed something obvious.

Kevin I’m using 0.4.4

I was able to sync data from 2 different schemas when specify in the connector params.

I also had it working the very first time. But then once I deleted the first connection and tried to re-create, it did not work. I ended up re-working my flow to use the public schema

Ok, let me know if you need any further assistance here.

Hi there from the Community Assistance team.

We’re letting you know about an issue we discovered with the back-end process we use to handle topics and responses on the forum. If you experienced a situation where you posted the last message in a topic that did not receive any further replies, please open a new topic to continue the discussion. In addition, if you’re having a problem and find a closed topic on the subject, go ahead and open a new topic on it and we’ll follow up with you. We apologize for the inconvenience, and appreciate your willingness to work with us to provide a supportive community.